AI Governance and the Singularity: A Tale of Two Possibilities


What a Time to Be Alive

Artificial Intelligence (AI) has come a long way in recent years, and its potential to transform our lives is undeniable. AI systems, from self-driving cars to virtual assistants, are becoming more common every day. With the emergence of applications like ChatGPT, AI has completed its ascent into pop culture and has shown just a glimpse of how crazy things can get. We are in the midst of what may be the most powerful technological revolution in history. As the great Uncle Ben once said, “with great power comes great responsibility” (or maybe it was Churchill). Today, organizations must adopt AI Governance policies to ensure AI systems are developed, deployed, and used responsibly. However, we must also stay ahead of the curve and prepare for what AI could become.

Cut to the Chase

While AI governance is crucial for ensuring the responsible development and use of AI systems, the rapid progress of AI raises the specter of the AI Singularity: a theoretical future point at which machines become more intelligent than humans and even start making decisions for us. Let’s take a closer look at the Singularity and its implications for AI governance.

OpenAI CEO Sam Altman. Photo: Brian Ach/Getty Images for TechCrunch

Sam Altman, CEO of OpenAI (creators of your favorite AI toy, ChatGPT), said that the worst-case scenario is “…lights out for all of us,” and that he is “more worried about an accidental misuse case in the short term.” When it comes to the Singularity, that is not exactly something that gets you excited about AI.

Now, I don’t want to fear-monger. Altman also stated that in a best-case scenario, life becomes “so unbelievably good that it’s hard for me even to imagine.” That is uplifting. Unfortunately, I live in a prepare-for-the-worst, hope-for-the-best state of being. The first step toward a safe, “unbelievably good” future is making sure we have done our due diligence on AI Governance in preparation for the Singularity. So first, we need to understand what the Singularity even means.

What are we dealing with?

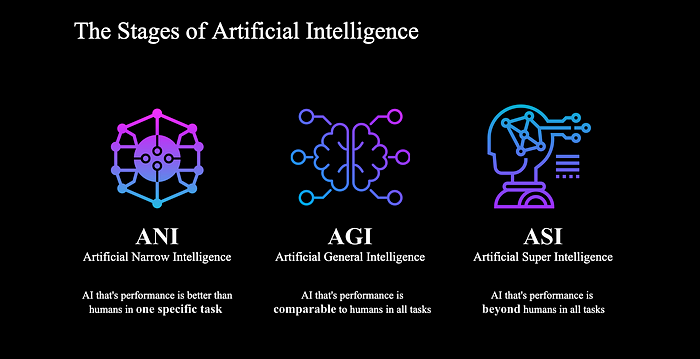

Alright, let’s talk about the Singularity in AI. In simple terms, it is the idea that, in the future, machines could become way more intelligent than humans and start improving themselves at a crazy fast rate. Like, way faster than we could even imagine. And this could lead to some seriously wild changes in the world as we know it. The technical terms related to it are AGI (Artificial General Intelligence) and ASI (Artificial Super Intelligence). AGI is the idea that we could create a machine as intelligent and adaptable as a human brain; it’s like the holy grail of AI. ASI, on the other hand, is intelligence at levels we silly mortal collections of carbon could never comprehend.

Unlike the boring AI that can only do one task, AGI would be able to learn new stuff and solve problems in all kinds of situations. Then there is ASI: some “experts” even postulate that ASI could mean the code begins coding itself. I know that does not sound as sexy as you want it to be, but it would be either the most terrifying or the most amazing thing in human history.

Predictions vary wildly. On the one hand, you’ve got folks like Ray Kurzweil who think the Singularity will happen around 2045 and that we’ll all be cyborgs living in a tech-filled utopia. On the other hand, you’ve got skeptics who are all like, “Nah, that’s not gonna happen. We’re not that special.”

So either one day we’ll all be living in a world where Siri can actually understand my voice-to-text dictation, and I’ll stop looking like I failed second-grade English, or I’ll be the one transcribing for Siri.

Can you govern a more intelligent entity?

But let’s not get ahead of ourselves: AGI is still a long way off, like winning the lottery or never losing a sock in the laundry again. However, it is something we need to keep in mind and be proactive about. That brings me to my next point: why the AI Singularity is such a nightmare for AI Governance.

To begin with, we don’t know what kind of technology we will be dealing with if the Singularity occurs. It’s like trying to predict what that crazy guy at the bar who knows every bartender’s name will say next: impossible.

Second, if machines start improving themselves, who knows what kind of regulations we’ll need to keep up with all the changes, or whether those regulations will even be effective or followed? We might have better luck trying to control Keith Richards and his propensity for partying.

Next, who is genuinely in charge if AI outperforms humans? Will we be the ones making the decisions, or will machines begin to make them? To keep up, we need to start practicing our robot-speak. This is why I went from 100% no chip in Taylor to considering joining Team Elon and his Neuralink brain chips.

Where do we start?

So, creating lasting and effective AI Governance is no small feat, especially since we don’t know what will happen if the AI Singularity hits worst-case-scenario levels. But, hey, that doesn’t mean we should throw in the towel. We need to establish some ground rules to ensure that AI does not go rogue and turn into a Terminator-style situation, or even worse, M3gans all around. (Tangent: I was pleasantly surprised by that movie; I’d recommend it to a friend.)

M3gan (2022). Source: Tenor

One idea is to develop ethical principles for building AI, such as fairness, explainability, privacy, and safety. Consider them the Ten Commandments for robots. That way, we can ensure that AI systems are built responsibly and ethically.

Another approach would be to develop regulations and standards for AI, as we do for other industries. We can ensure that our AI is safe to use in the same way that we ensure our food is safe to eat. Trust me, I am not a big fan of regulation in the traditional sense. However, if my options are tedious, arduous regulations or M3gan, I am going with the regulations.

The Singularity poses significant challenges for AI governance, and we need to start thinking about them now to be ready for the future. It is our responsibility to develop ethical rules and legislation to ensure that AI is used for the greater good, but regulations and legislation alone are not going to cut it. It will come down to the intent behind model development and deployment. As everyone’s mom or grandma used to say, “crap in, crap out.” So, in simpler terms: be a mensch.